You Can’t Handle the Truth about Facebook Ads, New Harvard Study Shows

MEDIA, 14 May 2018

Illustration: Scott Gelber for The Intercept

9 May 2018 – After it emerged that Facebook user data was illicitly harvested to help elect Donald Trump, the company offered weeks of apologies, minor reforms to how it shares such information, and a pledge to make itself “more transparent,” including new, limited disclosures around advertising. But Facebook still tells its 2 billion users very little about how it targets them for ads that represent essentially the whole of the company’s business. New research illuminates the likely reason why: The truth grosses people out.

The study, based on research conducted at Harvard Business School and published in the Journal of Consumer Research, is an inquiry into the tradeoffs between transparency and persuasion in the age of the algorithm. Specifically, it examines what happens if a company reveals to people how and why they’ve been targeted for a given ad, exposing the algorithmic trail that, say, inferred that you’re interested in discounted socks based on a constellation of behavioral signals gleaned from across the web. Such targeting happens to virtually everyone who uses the internet, almost always without context or explanation.

In the Harvard study, research subjects were asked to browse a website where they were presented with various versions of an advertisement — identical except for accompanying text about why they were being shown the ad. Time and time again, people who were told that they were targeted based on activity elsewhere on the internet were turned off and became less interested in what the ad was touting than people who saw no disclosure or were told that they were targeted based on how they were browsing the original site. In other words, if you track people across the internet, as Facebook routinely does, and admit that fact to them, the transparency will poison the resulting ads. The 449 paid subjects in the targeting research, who were recruited online, were about 24 percent less likely to be interested in making a purchase or visiting the advertiser if they were in the group that was told they were tracked across websites, researchers said.

“Ad transparency that revealed unacceptable information flows reduced ad effectiveness.”

In a related research effort described in the same study, a similar group of subjects was 17 percent less interested in purchasing if they had been told they’d been targeted for an advertisement based on “information that we inferred about you,” as compared to people who were told they were targeted based on information they themselves provided or who were told nothing at all. Facebook makes inferences about its users not only by leveraging third-party data, but also through the use of artificial intelligence.

It’s easy to see the conflict this represents for a company recently re-dedicated to transparency and honesty that derives much of its stock market value from opacity.

The paper inadvertently offers an answer to a crucial question of our time: Why won’t Facebook just level with us? Why all the long, vague transparency pledges and congressional evasion? The study concludes that when the data mining curtain is pulled back, we really don’t like what we see. There’s something unnatural about the kind of targeting that’s become routine in the ad world, this paper suggests, something taboo, a violation of norms we consider inviolable — it’s just harder to tell they’re being violated online than off. But the revulsion we feel when we learn how we’ve been algorithmically targeted, the research suggests, is much the same as what we feel when our trust is betrayed in the analog world.

The research was, as the study puts it, “premised on the notion that ad transparency undermines ad effectiveness when it exposes marketing practices that violate consumers’ beliefs about ‘information flows’ — how their information ought to move between parties.” So if a clothing store asks you for your email address so that it can send you promotional spam, you may not enjoy it, but you probably won’t consider it a breach of trust. But if that same store were, say, covertly following your movements between the aisles by tracking your cellphone, that would be unnerving, to say the least. Given that Facebook operates its advertising operation largely on the basis of data harvesting that’s conducted invisibly or behind the veil of trade secrecy, it has more in common with our creepy hypothetical retailer.

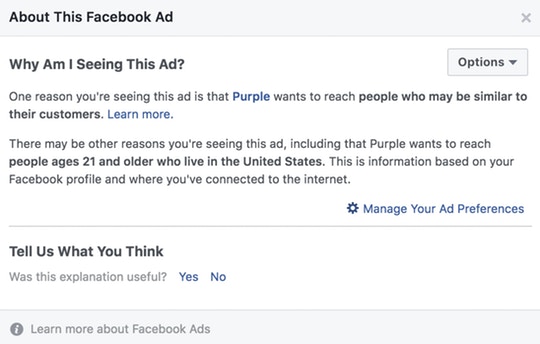

Facebook claims that it does offer advertising transparency in the form of a tiny, hard-to-locate button that will disclose an extremely vague summary of why you were targeted for a given ad:

“Conspicuous disclosure is uncommon in today’s marketplace,” the study notes. “Digital advertisements are not usually accompanied by information on how they were generated, and when they are, this information is typically inconspicuous, merely made available for the motivated consumer to find.” See above.

The research team tested what would happen if targeted ads were automatically accompanied with explanations of the targeting process, rather than requiring curious users to find the right button. The results are stark and telling:

Ad transparency reduced ad effectiveness when it revealed cross-website tracking — an information flow that consumers deem unacceptable, as identified by our inductive study. … Ad transparency that revealed unacceptable information flows heightened concern for privacy over interest in personalization, reducing ad effectiveness.

In other words, for the same reasons you might not actually want to look at the dingy kitchen that just cooked your greasy burger, ad transparency can be deeply alarming.

For those following Mark Zuckerberg’s various apologias this year, this sounds at odds with one of the Facebook CEO’s favorite lines: People actually want targeted ads. This rationale made a notable appearance during Zuckerberg’s first day of congressional testimony (emphasis added):

Senator, people have a control over how their information is used in ads in the product today. So if you want to have an experience where your ads aren’t — aren’t targeted using all the information that we have available, you can turn off third-party information.

What we found is that even though some people don’t like ads, people really don’t like ads that aren’t relevant. And while there is some discomfort for sure with using information in making ads more relevant, the overwhelming feedback that we get from our community is that people would rather have us show relevant content there than not.

According to Leslie John, an associate professor at Harvard Business School and one of the paper’s authors, this defense by Zuckerberg “oversimplifies things.” If internet users have no choice about whether they’ll have to see ads or not, they may prefer to see so-called relevant ads. But, as John wrote in a Harvard Business Review article accompanying her paper, “the research supporting ad personalization has tended to study consumers who were largely unaware that their data dictated which ads they saw.”

Or as John explained via email, “If I have to see ads, then yeah, I’d generally prefer ones that are relevant than not relevant but I’d add the qualifier: as long as I get the sense that you are treating my personal information properly. As soon as people feel that you are violating their privacy, they can become uneasy and understandably, distrustful of you.” Zuckerberg’s claim that you prefer to have your most personal information and online behavior tracked and analyzed on an industrial scale probably only checks out if you’re unaware it’s happening.

Assuming the validity of the research here, it’s no wonder Facebook doesn’t want to show its math: The ads that are its lifeblood will stop working as well. John agreed that “there’s a disincentive for firms to reveal unsavory information flows, so that could plausibly explain trying to hide it.” Facebook is, after all, one big, world-spanning, unsavory information flow.

_________________________________________

Sam Biddle – sam.biddle@theintercept.com


Go to Original – theintercept.com
