How Laws Restricting Tech Actually Expose Us to Greater Harm

TECHNOLOGY, 29 Dec 2014

Cory Doctorow, Wired – TRANSCEND Media Service

We live in a world made of computers. Your car is a computer that drives down the freeway at 60 mph with you strapped inside. If you live or work in a modern building, computers regulate its temperature and respiration. And we’re not just putting our bodies inside computers—we’re also putting computers inside our bodies. I recently exchanged words in an airport lounge with a late arrival who wanted to use the sole electrical plug, which I had beat him to, fair and square. “I need to charge my laptop,” I said. “I need to charge my leg,” he said, rolling up his pants to show me his robotic prosthesis. I surrendered the plug.

You and I and everyone who grew up with earbuds? There’s a day in our future when we’ll have hearing aids, and chances are they won’t be retro-hipster beige transistorized analog devices: They’ll be computers in our heads.

And that’s why the current regulatory paradigm for computers, inherited from the 16-year-old stupidity that is the Digital Millennium Copyright Act, needs to change. As things stand, the law requires that computing devices be designed to sometimes disobey their owners, so that their owners won’t do something undesirable. To make this work, we also have to criminalize anything that might help owners change their computers to let the machines do that supposedly undesirable thing.

This approach to controlling digital devices was annoying back in, say, 1995, when we got the DVD player that prevented us from skipping ads or playing an out-of-region disc. But it will be intolerable and deadly dangerous when our 3-D printers, self-driving cars, smart houses, and even parts of our bodies are designed with the same restrictions. Because those restrictions would change the fundamental nature of computers. Speaking in my capacity as a dystopian science fiction writer: This scares the hell out of me.

If we are allowed to have total control over our own computers, we may enter a sci-fi world of unparalleled leisure and excitement.

The general-purpose computer is one of the crowning achievements of industrial society. Prior to its invention, electronic calculating engines were each hardwired to do just one thing, like calculate ballistics tables. John von Neumann’s “von Neumann architecture” and Alan Turing’s “Turing-complete computer” provided the theoretical basis for building a calculating engine that could run any program that could be expressed in symbolic language. That breakthrough still ripples through society, revolutionizing every corner of our world. When everything is made of computers, an improvement in computers makes everything better.

But there’s a terrible corollary to that virtuous cycle: Any law or regulation that undermines computers’ utility or security also ripples through all the systems that have been colonized by the general-purpose computer. And therein lies the potential for untold trouble and mischief.

Because while we’ve spent the past 70 years perfecting the art of building computers that can run every single program, we have no idea how to build a computer that can run every program except the one that infringes copyright or prints out guns or lets a software-based radio be used to confound air-traffic control signals or cranks up the air-conditioning even when the power company sends a peak-load message to it.

The closest approximation we have for “a computer that runs all the programs except the one you don’t like” is “a computer that is infected with spyware out of the box.” By spyware I mean operating-system features that monitor the computer owner’s commands and cancel them if they’re on a blacklist. Think, for example, of image scanners that can detect if you’re trying to scan currency and refuse to further process the image. As much as we want to prevent counterfeiting, imposing codes and commands that you can’t overrule is a recipe for disaster.

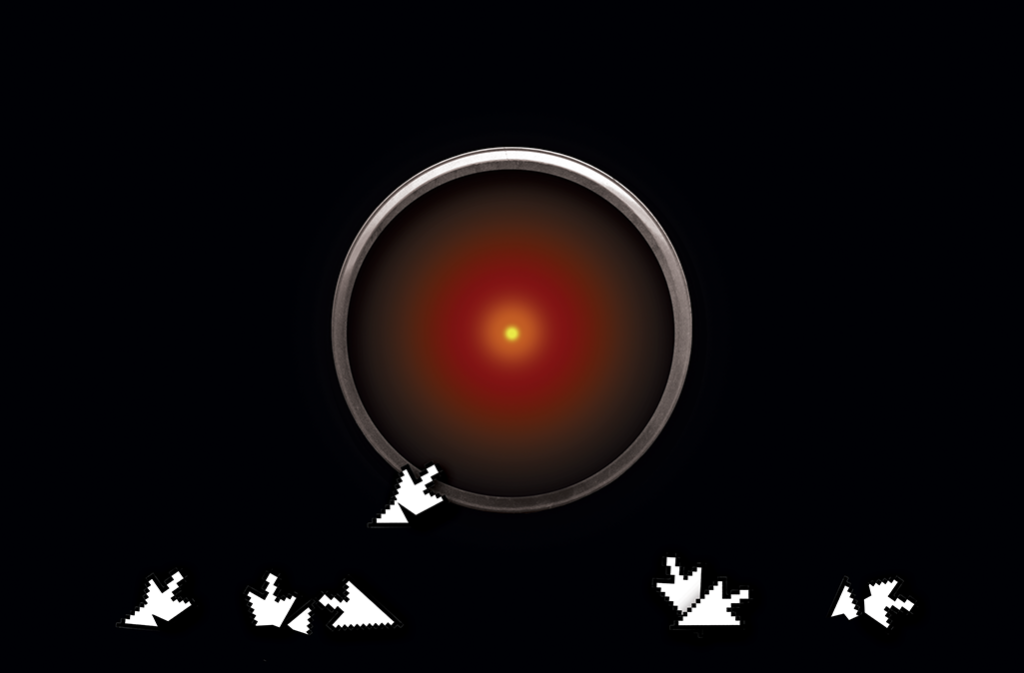

Why? Because for such a system to work, remote parties must have more privileges on it than the owner. And such a security model must hide its operation from the computer’s normal processes. When you ask your computer to do something reasonable, you expect it to say, “Yes, master” (or possibly “Are you sure?”), not “I CAN’T LET YOU DO THAT, DAVE.”

If the “I CAN’T LET YOU DO THAT, DAVE” message is being generated by a program on your desktop labeled HAL9000.exe, you will certainly drag that program into the trash. If your computer’s list of running programs shows HAL9000.exe lurking in the background like an immigration agent prowling an arrivals hall, looking for sneaky cell phone users to shout at, you will terminate that process with a satisfied click.

If your com- puter decides it can’t let you do some- thing, you’ll certainly want to drag that HAL9000 .exe file to the trash. Matt Dorfman

So the only way to sustain HAL9000.exe and its brethren—the programs that today keep you from installing non-App Store apps on your iPhone and tomorrow will try to stop you from printing gun.stl on your 3-D printer—is to design the computer to hide them from you. And that creates vulnerabilities that make your computer susceptible to malicious hacking. Consider what happened in 2005, when Sony BMG started selling CDs laden with the notorious Sony rootkit, software designed to covertly prevent people from copying music files. Once you put one of Sony BMG’s discs into your computer’s CD drive, it would change your OS so that files beginning with $sys$ were invisible to the system. The CD then installed spyware that watched for attempts to rip any music CD and silently blocked them. Of course, virus writers quickly understood that millions of PCs were now blind to any file that began with $sys$ and changed the names of their viruses accordingly, putting legions of computers at risk.

Code always has flaws, and those flaws are easy for bad guys to find. But if your computer has deliberately been designed with a blind spot, the bad guys will use it to evade detection by you and your antivirus software. That’s why a 3-D printer with anti-gun-printing code isn’t a 3-D printer that won’t print guns—the bad guys will quickly find a way around that. It’s a 3-D printer that is vulnerable to hacking by malware creeps who can use your printer’s “security” against you: from bricking your printer to screwing up your prints to introducing subtle structural flaws to simply hijacking the operating system and using it to stage attacks on your whole network.

This business of designing computers to deliberately weasel and lie isn’t the worst thing about the war on the general-purpose computer and the effort to bodge together a “Turing-almost-complete” architecture that can run every program except for one that distresses a government, police force, corporation, or spy agency.

No, the worst part is that, like the lady who had to swallow the bird to catch the spider that she’d swallowed to catch the fly, any technical system that stops you from being the master of your computer must be accompanied by laws that criminalize information about its weaknesses. In the age of Google, it simply won’t do to have “uninstall HAL9000.exe” return a list of videos explaining how to jailbreak your gadgets, just as videos that explain how to jailbreak your iPhone today could technically be illegal; making and posting them could potentially put their producers (and the sites that host them) at risk of prosecution.

This amounts to a criminal sanction for telling people about vulnerabilities in their own computers. And because today your computer lives in your pocket and has a camera and a microphone and knows all the places you go; and because tomorrow that speeding car/computer probably won’t even sport a handbrake, let alone a steering wheel—the need to know about any mode that could be exploited by malicious hackers will only get more urgent. There can be no “lawful interception” capacity for a self-driving car, allowing police to order it to pull over, that wouldn’t also let a carjacker compromise your car and drive it to a convenient place to rob, rape, and/or kill you.

If those million-eyed, fast-moving, deep-seated computers are designed to obey their owners; if the policy regulating those computers encourages disclosure of flaws, even if they can be exploited by spies, criminals, and cops; if we’re allowed to know how they’re configured and permitted to reconfigure them without being overridden by a distant party—then we may enter a science fictional world of unparalleled leisure and excitement.

But if the world’s governments continue to insist that wiretapping capacity must be built into every computer; if the state of California continues to insist that cell phones have kill switches allowing remote instructions to be executed on your phone that you can’t countermand or even know about; if the entertainment industry continues to insist that the general-purpose computer must be neutered so you can’t use it to watch TV the wrong way; if the World Wide Web Consortium continues to infect the core standards of the web itself to allow remote control over your computer against your wishes—then we are in deep, deep trouble.

The Internet isn’t just the world’s most perfect video-on-demand service. It’s not simply a better way to get pornography. It’s not merely a tool for planning terrorist attacks. Those are only use cases for the net; what the net is, is the nervous system of the 21st century. It’s time we started acting like it.

DISCLAIMER: The statements, views and opinions expressed in pieces republished here are solely those of the authors and do not necessarily represent those of TMS. In accordance with title 17 U.S.C. section 107, this material is distributed without profit to those who have expressed a prior interest in receiving the included information for research and educational purposes. TMS has no affiliation whatsoever with the originator of this article nor is TMS endorsed or sponsored by the originator. “GO TO ORIGINAL” links are provided as a convenience to our readers and allow for verification of authenticity. However, as originating pages are often updated by their originating host sites, the versions posted may not match the versions our readers view when clicking the “GO TO ORIGINAL” links. This site contains copyrighted material the use of which has not always been specifically authorized by the copyright owner. We are making such material available in our efforts to advance understanding of environmental, political, human rights, economic, democracy, scientific, and social justice issues, etc. We believe this constitutes a ‘fair use’ of any such copyrighted material as provided for in section 107 of the US Copyright Law. In accordance with Title 17 U.S.C. Section 107, the material on this site is distributed without profit to those who have expressed a prior interest in receiving the included information for research and educational purposes. For more information go to: http://www.law.cornell.edu/uscode/17/107.shtml. If you wish to use copyrighted material from this site for purposes of your own that go beyond ‘fair use’, you must obtain permission from the copyright owner.