Amazon’s Accent Recognition Technology Could Tell the Government Where You’re From

WHISTLEBLOWING - SURVEILLANCE, 19 Nov 2018

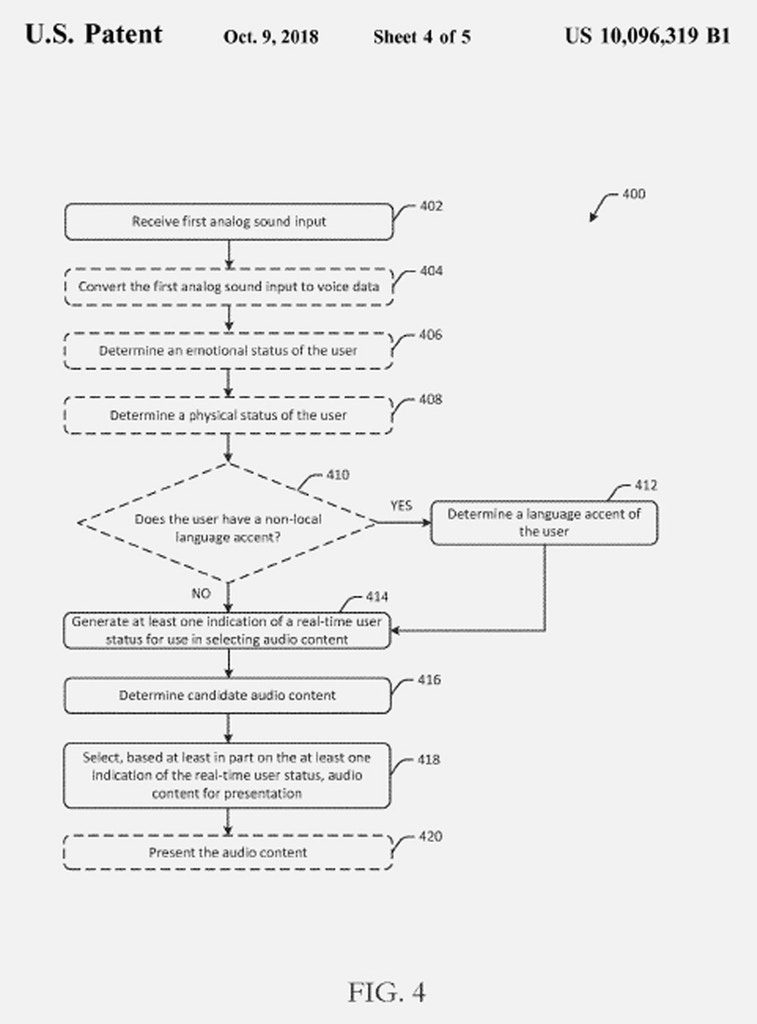

15 Nov 2018 – At the beginning of October, Amazon was quietly issued a patent that would allow its virtual assistant Alexa to decipher a user’s physical characteristics and emotional state based on their voice. Characteristics, or “voice features,” like language accent, ethnic origin, emotion, gender, age, and background noise would be immediately extracted and tagged to the user’s data file to help deliver more targeted advertising.

The algorithm would also consider a customer’s physical location (based on their IP address, primary shipping address, and browser settings) to help determine their accent. If Amazon’s patent becomes a reality, or if accent detection is already possible, it would raise questions of surveillance and privacy violations, as well as possible discriminatory advertising, experts said.

The civil rights issues raised by the patent are similar to those around facial recognition, another technology Amazon has used as an anchor of its artificial intelligence strategy, and one that it controversially marketed to law enforcement. Like facial recognition, voice analysis underlines how existing laws and privacy safeguards simply aren’t capable of protecting users from new categories of data collection — or government spying, for that matter. Unlike facial recognition, voice analysis relies not on cameras in public spaces, but microphones inside smart speakers in our homes. It also raises its own thorny issues around advertising that targets or excludes certain groups of people based on derived characteristics like nationality, native language, and so on (the sort of controversy that Facebook has stumbled into again and again).

From Amazon’s patent, an illustration of a process for determining physical and emotional characteristics from someone’s voice, resulting in tailored audio content like ads. Document: United States Patent and Trademark Office

Why the Government Might Be Interested in Accent Data

If voice-based accent detection can determine a person’s ethnic background, it opens up a new category of information that is incredibly interesting to the government, said Jennifer King, director of consumer privacy at Stanford Law School’s Center for Internet and Society.

“If you’re a company and you’re creating new classifications of data, and the government is interested in them, you’d be naive to think that law enforcement isn’t going to come after it,” she said.

She described a scenario in which knowing a user’s purchase history, existing demographic data, and whether they speak Arabic or Arabic-accented English, Amazon could identify the user as belonging to a religious or ethnic group. King said it’s plausible that the FBI would compel the production of such data from Amazon if it could help determine a user’s membership in a terrorist group. Data demands focused on terrorism are tougher for companies to fight, she said, as opposed to those that are vague or otherwise overbroad, which they have pushed back on.

Andrew Crocker, a senior staff attorney at the Electronic Frontier Foundation, said the Foreign Intelligence Surveillance Act, or FISA, makes it possible for the government to covertly demand such data. FISA governs electronic spying conducted to acquire information on foreign powers, allowing such monitoring without a warrant in some circumstances and in others under warrants issued by a court closed to the public, with only the government represented. The communications of U.S. citizens and residents are routinely acquired under the law, in many cases incidentally, but even incidentally collected communications may later be used against Americans in FBI investigations. Under FISA, the government could “get information in secret more easily, and there are mass or bulk surveillance capabilities that don’t exist in domestic law,” said Crocker. “Certainly it could be done in secret with less court oversight.”

Jennifer Granick, a surveillance and cybersecurity lawyer at the American Civil Liberties Union’s Speech, Privacy, and Technology Project, suggested that Amazon’s accent data could also provide the government with information for the purpose of immigration control.

“Let’s say you have ICE go to one of these providers and say, ‘Give us all the subscription information of people who have Spanish accents’ … in order to identify people of a particular race or who theoretically might have relatives who are undocumented,” she said. “So you can see that this type of information can definitely be abused.”

Though King said she hasn’t seen evidence of these types of government requests, she has witnessed “parallel things happen in other contexts.” It’s also possible that if Amazon was sent a National Security Letter by the FBI, a gag order would prevent the company from disclosing much, including the exact number of letters it received. National Security Letters compel the disclosure of certain types of information from communications firms, like a subpoena would, but often in secret. The letters require the companies to hand over select data, like the name of an account owner and the age of an account, but the FBI has routinely asked for more, including email headers and internet browsing history.

Compared to some other tech giants, however, Amazon is less detailed in its disclosures about National Security Letters it receives and about data requests in general. For example, in its information request reports, it does not disclose how many NSLs it has received or how many accounts are affected by national security requests, as Apple and Google do. These more specific disclosures from other companies show a trend: From mid-2016 to the first half of 2017, national security requests sent to Apple, Facebook, and Google increased significantly.

But even if the government hasn’t yet made such requests of Amazon, we know that it has been paying attention to voice and speech technology for some time. In January, The Intercept reported that the National Security Agency had developed technology not just to record and transcribe private conversations, but also to automatically identify speakers. An individual’s “voiceprint” was created, which could be cross-referenced with millions of intercepted and recorded telephone, video, and internet calls.

To create an American citizen’s “voiceprint,” which government documents don’t explicitly indicate has been done, experts said the NSA would need only to tap into Amazon’s or Google’s existing voice data.

Over the past year, Amazon’s relationship with the government has become increasingly cozy. BuzzFeed recently revealed details about how the Orlando Police Department was piloting Rekognition, Amazon’s facial recognition technology, to identify “persons of interest.” A few months earlier, Amazon was outed by the ACLU for “marketing Rekognition for government surveillance.” Meanwhile, in June, the company was busy pitching Immigration and Customs Enforcement officials on its technology.

Though these revelations have set off alarm bells, even for Amazon employees, experts said that speech recognition presents similar concerns that are equally if not more pressing. Amazon’s voice processing patent dates to March of last year. The company, in response to questions from The Intercept, described the patent as exploratory and pledged to abide by its privacy policy when collecting and using data.

Privacy Law Lags Behind Technology

Weak privacy laws in the U.S. are one reason consumers are vulnerable when tech companies start gathering new types of data about them. There is nothing in the law that protects data collected about a person’s mood or accent, said Granick.

In the absence of strong legal protections, consumers are forced to make their own decisions about trade-offs between their privacy and the convenience of virtual assistants. “Being able to use really robust voice control would be great if it meant you weren’t just being put into a giant AI algorithm and being used to improve your pitchability for new products, especially when you’re paying for these systems,” said King.

The Electronic Communications Privacy Act, or ECPA, first passed in 1986, was a major step forward in privacy protection at the time. But now, over 30 years later, it has yet to catch up with the pace of technological innovation. Generally, under ECPA, government agencies need a subpoena, court order, or search warrant to compel companies to disclose protected user information. Unlike court orders and search warrants, subpoenas don’t necessarily require judicial review.

Amazon’s most recent information request report, which covers the first half of this year, reveals that the company received 1,736 subpoenas during that time and turned over some or all of the requested information in response to 1,283 of them. Since Amazon began publishing these reports three years ago, the number of data requests it receives has steadily increased, with a huge jump between 2015 and 2016. Echo, its Alexa-enabled speaker for use at home, was released in 2015, and Amazon has not said whether the increase is related to the growing popularity of its speaker.

While ECPA protects the data associated with our digital conversations, Granick said its application to information collected by providers like Amazon is “anemic.” “The government could say, ‘Give us a list of everyone who you think is Chinese, Latino’ and the provider has to argue why they shouldn’t. That kind of conclusory data isn’t protected by ECPA, and it means the government can compel its disclosure with a subpoena,” she said.

Since ECPA is not explicit, there’s a legal question of whether Amazon could voluntarily turn over the conversations its users have with Alexa. Granick said Amazon could argue that such data is a protected electronic communication under ECPA — and require that the government get a warrant to access it — but as a party to the communication, Amazon also has the right to divulge it. There have been no court cases addressing the issue so far, she said.

Crocker, of the EFF, argued that communications with Alexa — voice searches and commands, for example — are protected by ECPA, but agreed that the government could obtain the data through a warrant or other legal process.

He added that the way Amazon stores accent information could impact the government’s ability to access it. If Amazon keeps it stored long term in customer profiles in the cloud, those profiles are easier to obtain than real-time voice communications, the interception of which invokes protections under the Wiretap Act, a federal law governing the interception and disclosure of communications. (ECPA amended the Wiretap Act to include electronic communications.) And if it’s stored in metadata associated with Alexa voice searches, the government has obtained those in past cases and could get to it that way.

In 2016, a hot tub murder case in Arkansas put the defendant’s Echo in the spotlight. Police seized the device and tried to obtain its recordings, prompting Amazon to argue that any communications with Alexa, and its responses, were protected as a form of free speech under the First Amendment. Amazon eventually turned over the records after the defendant gave his permission to do so. Last week, a New Hampshire judge ordered Amazon to turn over Echo recordings in a new murder case, amid renewed hopes that the recordings could provide evidence of the crime.

Dynamic, Targeted, and Discriminatory Ads

Beyond government surveillance, experts also expressed concern that Amazon’s new classification of data increases the likelihood of discriminatory advertising. When demographic profiling — the basis of traditional advertising — is layered with additional, algorithmically assessed information from Alexa, ads can quickly become invasive or offensive.

Granick said that if Amazon “gave one set of [housing] ads to people who had Chinese accents and a different set of ads to people who have a Finnish accent … highlighting primarily Chinese neighborhoods for one and European neighborhoods for another,” it would be discriminating on the basis of national origin or race.

King said Amazon also opens itself to charges of price discrimination, and even racism, if it allows advertisers to show and hide ads from certain ethnic or gender groups. “If you live in the O.C. and you have a Chinese accent and are upper middle-class, it could show you things that are higher price. [Alexa] might say, ‘I’m gonna send you Louis Vuitton bags based on those things,’” she said.

Selling products based on emotions also offers opportunities for advertisers to manipulate consumers. “If you’re a woman in a certain demographic and you’re depressed, and we know that binge shopping is something you do … knowing that you’re in kind of a vulnerable state, there’s no regulation preventing them from doing something like this,” King said.

An example from the patent envisions marketing to Chinese speakers, albeit in a more innocuous context, describing how ads might be targeted to “middle-aged users who speak Mandarin or have a Chinese accent and live in the United States.” If the user asks, “Alexa, what’s the news today?” Alexa might reply, “Before your news brief, you might be interested in the Xiaomi TV box, which allows you to watch over 1,000 real-time Chinese TV channels for just $49.99. Do you want to buy it?”

According to the patent, the ads may be presented in response to user voice input, but could also be “presented at any time.” They could even be injected into existing audio streams, such as a news briefing or playback of tracks from a music playlist.

Andrew DeVore, vice president and associate general counsel with Amazon.com Inc., right, listens as Len Cali, senior vice president of global public policy with AT&T Inc., speaks during a Senate Commerce Committee hearing on consumer data privacy in Washington, D.C., on Sept. 26, 2018.

Photo: Andrew Harrer/Bloomberg via Getty Images

New Rules Emerge for Data Privacy

In the wake of Facebook’s Cambridge Analytica scandal, lawmakers have grown increasingly wary of tech companies and their privacy practices. In late September, the Senate Commerce Committee held a fresh round of hearings with tech executives on the issue — also giving them an opportunity to explain how they’re addressing the new, stringent data privacy laws in the European Union and California.

California’s regulation, which passed in June and goes into effect in 2020, sets a new precedent for consumer privacy law in the country. It expands the definition of personal information and gives state residents greater control over the sharing and sale of their data to third parties.

Unsurprisingly, Amazon and other big tech companies pushed back forcefully on the new reforms, citing excessive penalties, compliance costs, and data collection restrictions, and each spent nearly $200,000 to defeat the measure. During the Senate hearing, Amazon Vice President and Associate General Counsel Andrew DeVore asked the committee to consider the “unintended consequences” of California’s law, which he called “confusing and difficult to comply with.”

Now that the midterm elections have passed, privacy advocates hope that congressional interest in privacy issues will turn into legislative action; thus far, it has not. The Federal Trade Commission is also considering updates to its consumer protection enforcement priorities. In September, it kicked off a series of hearings examining the impact of “new technologies” and other economic changes.

Granick said that as states move to protect consumers where the federal government has not, California could serve as a model for the rest of the country. In August, California also became the first state to pass an internet of things cybersecurity law, requiring that manufacturers add a “reasonable security feature” to protect the information their devices collect from unauthorized access, modification, or disclosure.

In 2008, Illinois became the first state to pass a law regulating biometric data, placing restrictions on the collection and storage of iris scan, fingerprint, voiceprint, hand scan, and face geometry data. (Granick says it’s unclear if accent data is covered under the law.) As the first state to pass such landmark legislation, Illinois presents a cautionary tale for California. Though its law was once considered a model, only two other states, Texas and Washington, have passed biometric privacy laws over the past 10 years. Similar efforts elsewhere were largely killed by corporate lobbying.

A Growing and Global Problem

Activists have looked to other countries as examples of what could go wrong if tech companies and government agencies become too friendly, and voice accent data gets misused.

Human Rights Watch reported last year that the Chinese government was creating a national voice biometric database using data from Chinese tech company iFlyTek, which provides its consumer voice recognition apps for free and claims its system can support 22 Chinese dialects. On its English website, iFlyTek said that its technology has been “inspected and praised” by “many party and state leaders,” including President Xi Jinping and Premier Li Keqiang.

The company is also the supplier of voice pattern collection systems used by regional police bureaus and runs a lab that develops voice surveillance technology for the Ministry of Public Security. Its technology has “helped solve cases” for law enforcement in Anhui, Gansu, Tibet, and Xinjiang, according to a state press report cited by Human Rights Watch. Activists warn that one possible use of the government’s voice database, which could contain dialect and accent-rich voice data from minority groups, is the surveillance of Tibetans and Uighurs.

Last year, Die Welt reported that the German government was testing voice analysis software to help verify where refugees are coming from. Officials hoped it could determine the dialects of people seeking asylum in Germany, which migration officers would use as one of several “indicators” when reviewing applications. The test was met with skepticism, as speech experts questioned whether software could make such a complex determination.

The amount of information people voluntarily give tech companies through smart speakers is growing, along with the purchases users are allowed to make. A Gallup survey conducted last year found that 22 percent of Americans currently use smart home personal assistants like Echo — placing them in living rooms, kitchens, and other intimate spaces. And 44 percent of U.S. adult internet users are planning to buy one, according to a Consumer Technology Association study.

Amazon’s move into the home with more sophisticated voice abilities for Alexa has been a long time coming. In 2016, it was already discussing emotion detection as a way to stay ahead of competitors Google and Apple. Also that year, it filed a patent application for a real-time language accent translator — a blend of accent detection and translation technologies. When its emotion and accent patent was issued last month, Alexa’s potential ability to read emotions and detect if customers are sick was called out as creepy.

Amazon Grapples With an “Accent Gap”

Amazon’s current accent handling capabilities are lackluster. In July, the Washington Post charged that Amazon and Google had created an “accent gap,” leaving non-native English speakers behind in the voice-activated technology revolution. Both Alexa and Google Assistant had the most difficulty understanding Chinese- and Spanish-accented English.

Since the advent of speech recognition technology, picking up on dialects, speech impediments, and accents has been a persistent challenge. If the technology in Amazon’s patent were available today, natural language processing experts said, its accent and emotion detection would not be able to draw precise conclusions. The training data used to teach artificial intelligence systems lacks diversity in the first place, and because language itself is constantly changing, any AI would have a hard time keeping up.

Though Amazon’s new patent is a sign that it’s paying attention to the “accent gap,” it may be doing so for the wrong reasons. Improved language accent detection makes voice technology more equitable and accessible, but it comes at a cost.

Regarding Patents

Patents are not a surefire sign of what tech companies have built, or what is even possible for them to build. Tech companies in particular submit a dizzying number of patent applications.

In an emailed statement, Amazon said that it filed “a number of forward-looking patent applications that explore the full possibilities of new technology. Patents take multiple years to receive and do not necessarily reflect current developments to products and services.” The company also said that it “will only collect and use data in accordance with our privacy policy,” and did not elaborate on other uses of its technology or data.

But King, who has also reviewed numerous Facebook patents, said that they can be used to infer the direction a company is headed.

“You’re seeing a future where the interactions with people and their interior spaces is getting a lot more aggressive,” she said. “That’s the next frontier for companies. Not just tracking your behavior, where you’ve gone, what they think you might buy. Now it’s what you’re thinking, feeling, and that is what makes people deeply uncomfortable.”

For now, people who want to hold onto their privacy and minimize surveillance risk shouldn’t buy a speaker at all, recommended Granick. “You’re basically installing a microphone for the government to listen in to you in your home,” she said.

________________________________________________

Related:

- Forget About Siri and Alexa — When It Comes to Voice Identification, the “NSA Reigns Supreme”

- Interpol Rolls Out International Voice Identification Database Using Samples From 192 Law Enforcement Agencies

- Facebook Allowed Advertisers to Target Users Interested in “White Genocide” — Even in Wake of Pittsburgh Massacre

Belle Lin – belle.lin@theintercept.com

Go to Original – theintercept.com
