Homeland Security Will Let Computers Predict Who Might Be a Terrorist on Your Plane — Just Don’t Ask How It Works

WHISTLEBLOWING - SURVEILLANCE, 10 Dec 2018

3 Dec 2018 – You’re rarely allowed to know exactly what’s keeping you safe. When you fly, you’re subject to secret rules, secret watchlists, hidden cameras, and other trappings of a plump, thriving surveillance culture. The Department of Homeland Security is now complicating the picture further by paying a private Virginia firm to build a software algorithm with the power to flag you as someone who might try to blow up the plane.

The new DHS program will give foreign airports around the world free software that teaches itself who the bad guys are, continuing society’s relentless swapping of human judgment for machine learning. DataRobot, a northern Virginia-based automated machine learning firm, won a contract from the department to develop “predictive models to enhance identification of high risk passengers” in software that should “make real-time prediction[s] with a reasonable response time” of less than one second, according to a technical overview that was written for potential contractors and reviewed by The Intercept. The contract assumes the software will produce false positives and requires that the terrorist-predicting algorithm’s accuracy should increase when confronted with such mistakes. DataRobot is currently testing the software, according to a DHS news release.

The contract also stipulates that the software’s predictions must be able to function “solely” using data gleaned from ticket records and demographics — criteria like origin airport, name, birthday, gender, and citizenship. The software can also draw from slightly more complex inputs, like the name of the associated travel agent, seat number, credit card information, and broader travel itinerary. The overview document describes a situation in which the software could “predict if a passenger or a group of passengers is intended to join the terrorist groups overseas, by looking at age, domestic address, destination and/or transit airports, route information (one-way or round trip), duration of the stay, and luggage information, etc., and comparing with known instances.”

DataRobot’s bread and butter is turning vast troves of raw data, which all modern businesses accumulate, into predictions of future action, which all modern companies desire. Its clients include Monsanto and the CIA’s venture capital arm, In-Q-Tel. But not all of DataRobot’s clients are looking to pad their revenues; DHS plans to integrate the code into an existing DHS offering called the Global Travel Assessment System, or GTAS, a toolchain that has been released as open source software and which is designed to make it easy for other countries to quickly implement no-fly lists like those used by the U.S.

According to the technical overview, DHS’s predictive software contract would “complement the GTAS rule engine and watch list matching features with predictive models to enhance identification of high risk passengers.” In other words, the government has decided that it’s time for the world to move beyond simply putting names on a list of bad people and then checking passengers against that list. After all, an advanced computer program can identify risky fliers faster than humans could ever dream of and can also operate around the clock, requiring nothing more than electricity. The extent to which GTAS is monitored by humans is unclear. The overview document implies a degree of autonomy, listing as a requirement that the software should “automatically augment Watch List data with confirmed ‘positive’ high risk passengers.”

The document does make repeated references to “targeting analysts” reviewing what the system spits out, but the underlying data-crunching appears to be almost entirely the purview of software, and it’s unknown what ability said analysts would have to check or challenge these predictions. In an email to The Intercept, Daniel Kahn Gillmor, a senior technologist with the American Civil Liberties Union, expressed concern with this lack of human touch: “Aside from the software developers and system administrators themselves (which no one yet knows how to automate away), the things that GTAS aims to do look like they could be run mostly ‘on autopilot’ if the purchasers/deployers choose to operate it in that manner.” But Gillmor cautioned that even including a human in the loop could be a red herring when it comes to accountability: “Even if such a high-quality human oversight scheme were in place by design in the GTAS software and contributed modules (I see no indication that it is), it’s free software, so such a constraint could be removed. Countries where labor is expensive (or controversial, or potentially corrupt, etc) might be tempted to simply edit out any requirement for human intervention before deployment.”

For the surveillance-averse, consider the following: Would you rather a group of government administrators, who meet in secret and are exempt from disclosure, decide who is unfit to fly? Or would it be better for a computer, accountable only to its own code, to make that call? It’s hard to feel comfortable with the very concept of profiling, a practice that so easily collapses into prejudice rather than vigilance. But at least with uniformed government employees doing the eyeballing, we know who to blame when, say, a woman in a headscarf is needlessly hassled, or a man with dark skin is pulled aside for an extra pat-down.

If you ask DHS, this is a categorical win-win for all parties involved. Foreign governments are able to enjoy a higher standard of security screening; the United States gains some measure of confidence about the millions of foreigners who enter the country each year; and passengers can drink their complimentary beverage knowing that the person next to them wasn’t flagged as a terrorist by DataRobot’s algorithm. But watchlists, among the most notorious features of post-9/11 national security mania, are of questionable efficacy and dubious legality. A 2014 report by The Intercept pegged the U.S. Terrorist Screening Database, an FBI data set from which the no-fly list is excerpted, at roughly 680,000 entries, including some 280,000 individuals with “no recognized terrorist group affiliation.” That same year, a U.S. district court judge ruled in favor of an ACLU lawsuit, declaring the no-fly list unconstitutional. The list could only be used again if the government improved the mechanism through which people could challenge their inclusion on it — a process that, at the very least, involved human government employees, convening and deliberating in secret.

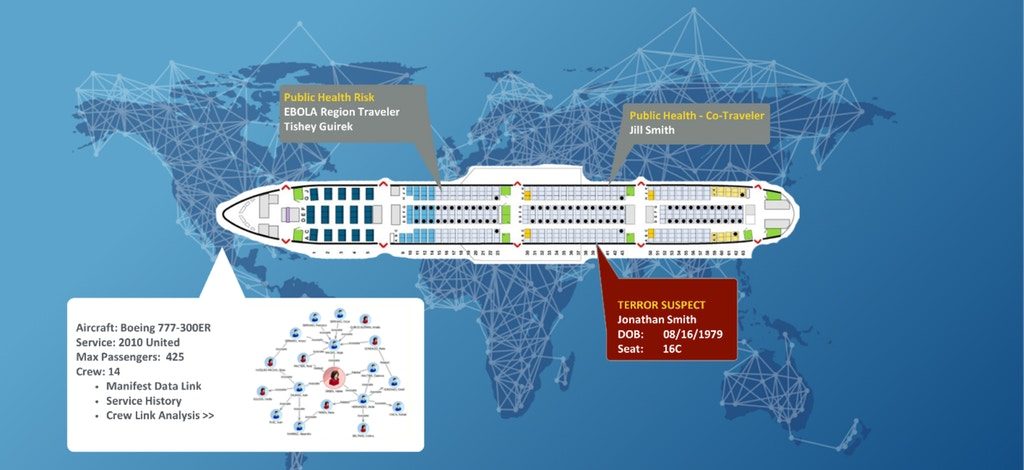

Diagram from a Department of Homeland Security technical document illustrating how GTAS might visualize a potential terrorist onboard during the screening process. Document: DHS

But what if you’re one of the inevitable false positives? Machine learning and behavioral prediction are already widespread; The Intercept reported earlier this year that Facebook is selling advertisers on its ability to forecast and pre-empt your actions. The consequences of botching consumer surveillance are generally pretty low: If a marketing algorithm mistakenly predicts your interest in fly fishing where there is none, the false positive is an annoying waste of time. The stakes at the airport are orders of magnitude higher.

What happens when DHS’s crystal ball gets it wrong — when the machine creates a prediction with no basis in reality and an innocent person with no plans to “join a terrorist group overseas” is essentially criminally defamed by a robot? Civil liberties advocates not only worry that such false positives are likely, possessing a great potential to upend lives, but also question whether such a profoundly damning prediction is even technologically possible. According to DHS itself, its predictive software would have relatively little information upon which to base a prognosis of impending terrorism.

Even from such mundane data inputs, privacy watchdogs cautioned that prejudice and biases always follow — something only worsened under the auspices of self-teaching artificial intelligence. Faiza Patel, co-director of the Brennan Center’s Liberty and National Security Program, told The Intercept that giving predictive abilities to watchlist software will present only the veneer of impartiality. “Algorithms will both replicate biases and produce biased results,” Patel said, drawing a parallel to situations in which police are algorithmically allocated to “risky” neighborhoods based on racially biased crime data, a process that results in racially biased arrests and a checkmark for the computer. In a self-perpetuating bias machine like this, said Patel, “you have all the data that’s then affirming what the algorithm told you in the first place,” which creates “a kind of cycle of reinforcement just through the data that comes back.” What kind of people should get added to a watchlist? The ones who resemble those on the watchlist.

Indeed, DHS’s system stands to deliver a computerized turbocharge to the bias that is already endemic to the American watchlist system. The overview document for the Delphic profiling tool made repeated references to the fact that it will create a feedback loop of sorts. The new system “shall automatically augment Watch List data with confirmed ‘positive’ high risk passengers,” one page read, with quotation marks doing some very real work. The software’s predictive abilities “shall be able to improve over time as the system feeds actual disposition results, such as true and false positives,” said another section. Given that the existing watchlist framework has ensnared countless thousands of innocent people, the notion of “feeding” such “positives” into a machine that will then search even harder for that sort of person is downright dangerous. It also becomes absurd: When the criteria for who is “risky” and who isn’t are kept secret, it’s quite literally impossible for anyone on the outside to tell what is a false positive and what isn’t. Even for those without civil libertarian leanings, the notion of an automatic “bad guy” detector that uses a secret definition of “bad guy” and will learn to better spot “bad guys” with every “bad guy” it catches would be comical were it not endorsed by the federal government.
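The reinforcement cycle Patel describes can be illustrated with a toy model. The sketch below is not drawn from the DHS document or from GTAS; the group labels, rates, and the `simulate_feedback` function are all hypothetical. It assumes two groups with an identical underlying rate of "confirmed positives," and assumes that screening attention in each round is allocated in proportion to each group's current share of the watchlist.

```python
def simulate_feedback(watchlist, true_rate, screenings_per_round, rounds):
    """Toy model: screening effort follows the watchlist, and hits feed it back.

    watchlist            -- starting watchlist counts per group (hypothetical)
    true_rate            -- real rate of confirmed positives per screening, per group
    screenings_per_round -- total screenings available each round
    """
    counts = dict(watchlist)
    for _ in range(rounds):
        total = sum(counts.values())
        # Snapshot the shares first so every group is allocated from the same state.
        shares = {group: n / total for group, n in counts.items()}
        for group, share in shares.items():
            screened = screenings_per_round * share
            # Every confirmed hit is added back to the watchlist.
            counts[group] += screened * true_rate[group]
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


# Assumption: both groups have exactly the same true rate, but the starting
# watchlist is skewed 60/40 toward group A.
final_shares = simulate_feedback(
    watchlist={"A": 60.0, "B": 40.0},
    true_rate={"A": 0.01, "B": 0.01},
    screenings_per_round=1000,
    rounds=50,
)
print(final_shares)
```

Under these assumptions the 60/40 skew never corrects itself, even though the two groups are identical by construction: the group that starts out over-represented is screened more, produces more hits, and so stays over-represented. The returning data appears to confirm the initial imbalance rather than challenge it.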

For those troubled by the fact that this system is not only real but currently being tested by an American company, the fact that neither the government nor DataRobot will reveal any details of the program is perhaps the most troubling of all. When asked where the predictive watchlist prototype is being tested, the DHS tech directorate spokesperson, John Verrico, told The Intercept, “I don’t believe that has been determined yet,” and stressed that the program was meant for use with foreigners. Verrico referred further questions about test location and which “risk criteria” the algorithm will be trained to look for back to DataRobot. Libby Botsford, a DataRobot spokesperson, initially told The Intercept that she had “been trying to track down the info you requested from the government but haven’t been successful,” and later added, “I’m not authorized to speak about this. Sorry!” Subsequent requests sent to both DHS and DataRobot were ignored.

Verrico’s assurance — that the watchlist software is an outward-aiming tool provided to foreign governments, not a means of domestic surveillance — is an interesting feint given that Americans fly through non-American airports in great numbers every single day. But it obscures ambitions much larger than GTAS itself: The export of opaque, American-style homeland security to the rest of the world and the hope of bringing every destination in every country under a single, uniform, interconnected surveillance framework. Why go through the trouble of sifting through the innumerable bodies entering the United States in search of “risky” ones when you can move the whole haystack to another country entirely? A global network of terrorist-scanning predictive robots at every airport would spare the U.S. a lot of heavy, politically ugly lifting.

Predictive screening further shifts responsibility. The ACLU’s Gillmor explained that making these tools available to other countries may mean that those external agencies will prevent people from flying so that they never encounter DHS at all, which makes DHS less accountable for any erroneous or damaging flagging, a system he described as “a quiet way of projecting U.S. power out beyond U.S. borders.” Even at this very early stage, DHS seems eager to wipe its hands of the system it’s trying to spread around the world: When Verrico brushed off questions of what the system would consider “risky” attributes in a person, he added in his email that “the risk criteria is being defined by other entities outside the U.S., not by us. I would imagine they don’t want to tell the bad guys what they are looking for anyway. ;-)” DHS did not answer when asked whether there were any plans to implement GTAS within the United States.

Then there’s the question of appeals. Those on DHS’s current watchlists may seek legal redress; though the appeals system is generally considered inadequate by civil libertarians, it offers at least a theoretical possibility of removal. The documents surrounding DataRobot’s predictive modeling contract make no mention of an appeals system for those deemed risky by an algorithm, nor is there any requirement in the DHS overview document that the software must be able to explain how it came to its conclusions. Accountability remains a fundamental problem in the fields of machine learning and computerized prediction, with some computer scientists adamant that an ethical algorithm must be able to show its work, and others objecting on the grounds that such transparency compromises the accuracy of the predictions.

Gadeir Abbas, an attorney with the Council on American-Islamic Relations, who has spent years fighting the U.S. government in court over watchlists, saw the DHS software as only more bad news for populations already unfairly surveilled. The U.S. government is so far “not able to generate a single set of rules that have any discernible level of effectiveness,” said Abbas, and so “the idea that they’re going to automate the process of evolving those rules is another example of the technology fetish that drives some amount of counterterrorism policy.”

The entire concept of making watchlist software capable of terrorist predictions is mathematically doomed, Abbas added, likening the system to a “crappy Minority Report. … Even if they make a really good robot, and it’s 99 percent accurate,” the fact that terror attacks are “exceedingly rare events” in terms of naked statistics means you’re still looking at “millions of false positives. … Automation will exacerbate all of the worst aspects of the watchlisting system.”

The ACLU’s Gillmor agreed that this mission is simply beyond what computers are even capable of:

For very-low-prevalence outcomes like terrorist activity, predictive systems are simply likely to get it wrong. When a disease is a one-in-a-million likelihood, the surest bet is a negative diagnosis. But that’s not what these systems are designed to do. They need to “diagnose” some instances positively to justify their existence. So, they’ll wrongly flag many passengers who have nothing to do with terrorism, and they’ll do it on the basis of whatever meager data happens to be available to them.
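The arithmetic behind Abbas’s and Gillmor’s point can be worked through in a few lines. The sketch below uses illustrative numbers only: the one-billion passenger count and the one-in-a-million prevalence are assumptions chosen for the example, not figures from DHS or DataRobot, and “99 percent accurate” is interpreted here as 99 percent sensitivity and 99 percent specificity. The function name `screening_outcomes` is hypothetical.

```python
def screening_outcomes(passengers, prevalence, sensitivity, specificity):
    """Return (true positives, false positives, precision) for a screening test."""
    true_threats = passengers * prevalence
    innocents = passengers - true_threats
    # Real threats the system correctly flags.
    true_positives = true_threats * sensitivity
    # Innocent passengers the system wrongly flags.
    false_positives = innocents * (1 - specificity)
    # Share of all flagged passengers who are actual threats.
    precision = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, precision


# Assumed inputs: one billion passengers, one real threat per million travelers,
# and a system that is right 99 percent of the time in both directions.
tp, fp, precision = screening_outcomes(
    passengers=1_000_000_000,
    prevalence=1e-6,
    sensitivity=0.99,
    specificity=0.99,
)
print(f"true positives:  {tp:,.0f}")
print(f"false positives: {fp:,.0f}")
print(f"chance a flagged passenger is a real threat: {precision:.4%}")
```

With these inputs, the system flags roughly 10 million innocent people in order to catch about 990 real threats, so only about one flagged passenger in 10,000 is an actual positive. Changing the assumed prevalence or accuracy shifts the totals, but as long as the outcome is this rare, false positives vastly outnumber true ones.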

Predictive software is not just the future, but the present. Its expansion into the way we shop, the way we’re policed, and the way we fly will soon be commonplace, even if we’re never aware of it. Designating enemies of the state based on a crystal ball locked inside a box represents a grave, fundamental leap in how societies appraise danger. The number of active, credible terrorists-in-waiting is an infinitesimal slice of the world’s population. The number of people placed on watchlists and blacklists is significant. Letting software do the sorting — no matter how smart and efficient we tell ourselves it will be — will likely do much to worsen this inequity.

Sam Biddle – sam.biddle@theintercept.com

Go to Original – theintercept.com